MiniMax M2.5 is Very Good

Feb 14, 2026

Ziva is an AI agent that makes games in Godot. When MiniMax M2.5 was released, we suspected benchmaxxing but added it to our service anyway.

Our results did suggest some benchmaxxing, but they were still very good for the price. This is a glimpse of where inference costs are heading.

How We Test Models

We run our own eval sets. One eval is a 3-prompt Mario world benchmark:

- Create a tileset, tilemap, and Mario-like world with ground, floating platforms, and gaps

- Generate animated player and enemy sprites, add them to the world

- Write movement scripts (WASD + jump for the player, patrol AI for the enemy), attach them, and make sure everything works on the platforms
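One simple way to record a multi-prompt eval like this is to judge each phase separately. The following is a minimal, hypothetical sketch, not Ziva's actual harness: `PhaseResult` and `score_run` are names invented for this illustration, and each phase is assumed to be judged as simply completed or not.

```python
from dataclasses import dataclass


# Hypothetical record of one phase of the 3-prompt benchmark.
@dataclass
class PhaseResult:
    name: str
    completed: bool


def score_run(phases: list[PhaseResult]) -> dict:
    """Summarize a run: how many phases finished and whether all of them did."""
    done = sum(1 for p in phases if p.completed)
    return {
        "phases_completed": done,
        "phases_total": len(phases),
        "all_completed": done == len(phases),
    }


# Illustrative run: world and sprites built, but no movement scripts written.
run = [
    PhaseResult("world: tileset, tilemap, platforms, gaps", True),
    PhaseResult("sprites: animated player and enemy", True),
    PhaseResult("scripts: WASD + jump, enemy patrol AI", False),
]
print(score_run(run))
```

A pass/fail check per phase keeps "did everything get built" separate from finer checks such as whether the jump or the patrol AI actually works.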

Sample Data

| | Claude Haiku 4.5 | Gemini 3 Flash | MiniMax M2.5 |
|---|---|---|---|
| Cost | $0.34 | $0.06 | $0.16 |
| Steps | 30 | 16 | 36 |
| Duration | ~4.5 min | ~2 min | ~5 min |
| All 3 phases completed | Yes | No | Yes |
| Working movement + jump | Yes | No | Yes |
| Working enemy AI | Yes | No | Yes |

Gemini 3 Flash was the cheapest at $0.06, but it completed only about 40% of the task: it created a world and sprites but never wrote a single line of GDScript. By the third prompt, it had lost track of what it had already built and started recreating sprites instead of writing scripts.

MiniMax completed everything: a world with three tile types (pipe, brick, question block), an animated player with WASD + space jump, a mushroom enemy with patrol AI, and working collisions throughout. At $0.16, it costs roughly half as much as Haiku for comparable quality.
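The cost comparison is simple arithmetic on the per-run costs in the Sample Data table. This quick sketch only restates those three numbers; it assumes a single run per model is representative, which one benchmark run cannot guarantee.

```python
# Per-run costs (USD) taken from the Sample Data table.
costs = {
    "Claude Haiku 4.5": 0.34,
    "Gemini 3 Flash": 0.06,
    "MiniMax M2.5": 0.16,
}

haiku = costs["Claude Haiku 4.5"]
minimax = costs["MiniMax M2.5"]

ratio = haiku / minimax        # how many times more a Haiku run costs than a MiniMax run
savings = 1 - minimax / haiku  # fraction of Haiku's cost saved by using MiniMax instead

print(f"Haiku costs {ratio:.2f}x MiniMax; MiniMax saves {savings:.0%}")
```

The ratio comes out just over 2x, or a bit more than half the cost saved, which is where "roughly half as much" comes from.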

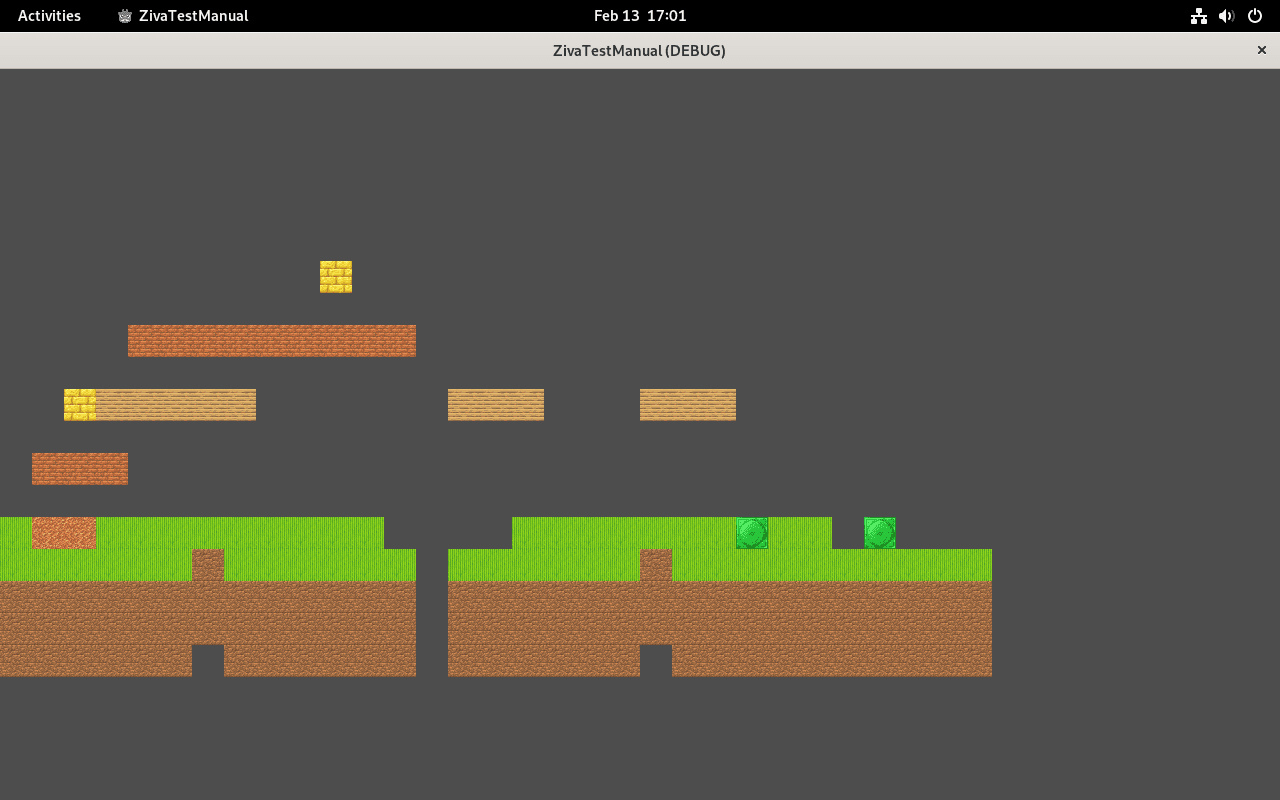

MiniMax M2.5 ($0.16): three distinct tile types, floating platforms, player and enemy with working scripts

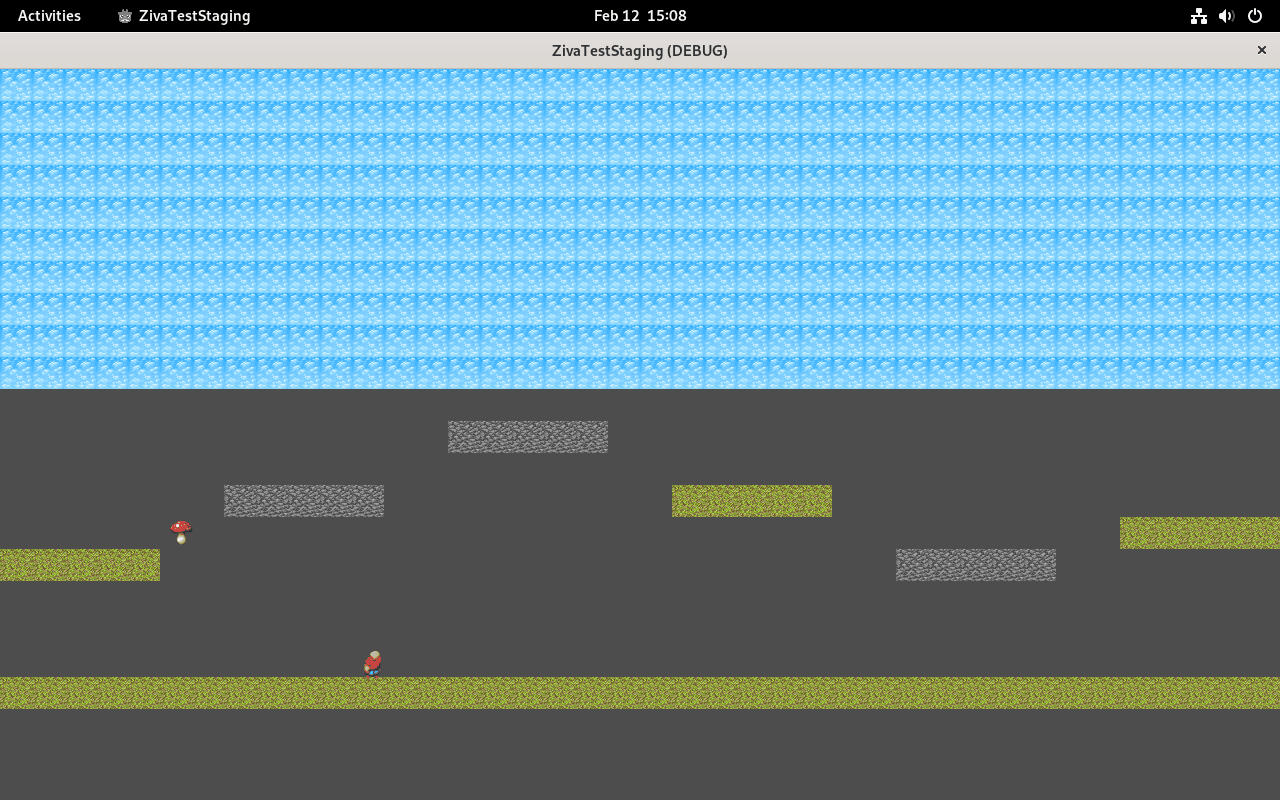

Claude Haiku 4.5 ($0.34): sky background, grass and stone platforms, player and mushroom enemy with scripts

Our most capable model, Claude Sonnet 4.5, costs roughly 10x what Haiku does on this benchmark. Extrapolating from that, MiniMax delivers similar task completion at a fraction of Sonnet's price.
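To put a rough number on "a fraction of the price", here is the extrapolation as arithmetic. This is an estimate, not a measured Sonnet run: it assumes the "roughly 10x Haiku" figure holds exactly, and `SONNET_MULTIPLIER` is a name introduced only for this sketch.

```python
# Rough extrapolation only: no Sonnet 4.5 run cost is reported here.
HAIKU_RUN_COST = 0.34    # USD per benchmark run, from the Sample Data table
MINIMAX_RUN_COST = 0.16  # USD per benchmark run, from the Sample Data table
SONNET_MULTIPLIER = 10   # assumption: "roughly 10x Haiku", taken at face value

sonnet_estimate = HAIKU_RUN_COST * SONNET_MULTIPLIER
minimax_share = MINIMAX_RUN_COST / sonnet_estimate  # MiniMax cost as a fraction of Sonnet's

print(f"Estimated Sonnet run: ${sonnet_estimate:.2f}")
print(f"MiniMax is about {minimax_share:.1%} of that")
```

Under that assumption, a MiniMax run costs under 5% of an estimated Sonnet run; if the true multiplier differs, the share scales inversely with it.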

The Downsides

What’s stopping us from setting this as the default model:

- MiniMax is slow. At ~5 minutes for our benchmark, it's the slowest model we offer. It also relies heavily on reasoning to get these results: 99% of its output tokens were reasoning tokens.

- Data retention is unclear. Through the Vercel AI Gateway, none of the MiniMax providers guarantee zero data retention or promise not to train on user data. For comparison, Anthropic (Claude) and Google (Gemini) both make this guarantee through their APIs. OpenAI (ChatGPT) doesn't guarantee zero retention, but promises in its privacy policy not to train on user data.

Prediction

Inference costs keep dropping, and this is just the latest data point. It's also awesome to see labs still publishing self-hostable models; if the West won't do it, I for one welcome our new Chinese model overlords!

Dan Ginovker - Founder + CEO